CRDs Killed the Free Kubernetes Control Plane

Today, Google announced that it is killing the free control plane for GKE clusters, except for one free zonal cluster per billing account. Let’s look at the timeline of events (source: release notes archive):

- June 2015 (k8s 0.19.3) - Kubernetes master VMs are no longer created for new clusters. They are now run as a hosted service. There is no Compute Engine instance charge for the hosted master.

- End of 2015 (k8s 1.0.x) - $0.15 per cluster per hour for clusters with 6 or more nodes; free for clusters with 5 or fewer nodes

- November 2017 (k8s 1.8.x) - Eliminated control plane management fees

- Starting June 6, 2020 (k8s 1.17.x?) - $0.10 per cluster per hour, except for one free zonal cluster per billing account. Also adds an SLA if using the default version offered in the Stable channel
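To make the timeline concrete, here is a rough sketch of the hourly per-cluster fee in each era. The exact cut-over dates for "End of 2015" and "November 2017" are my approximations, and the one free zonal cluster is deliberately left out; this is an illustration, not GKE's billing logic.

```python
from datetime import date

# Hourly GKE control-plane fee per cluster, in US cents, per the timeline above.
# Assumptions (not GKE's actual billing logic): "End of 2015" is taken as
# 2015-12-01, "November 2017" as 2017-11-01, and the one free zonal cluster
# per billing account from June 2020 is ignored.
def control_plane_cents_per_hour(on: date, nodes: int) -> int:
    if on < date(2015, 6, 1):
        raise ValueError("before the hosted master; not modeled")
    if on < date(2015, 12, 1):
        return 0  # hosted master, no instance charge
    if on < date(2017, 11, 1):
        return 15 if nodes >= 6 else 0  # free for 5 nodes and under
    if on < date(2020, 6, 6):
        return 0  # management fees eliminated
    return 10  # flat per-cluster fee, regardless of node count
```

At 10 cents an hour and roughly 730 hours in a month, the 2020 fee works out to about $73 per cluster per month.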

This post explores how Custom Resource Definitions (CRDs), which were introduced as v1beta1 in Kubernetes 1.7 and promoted to v1 in 1.16, have been used and abused, and how they killed the free Kubernetes control plane.

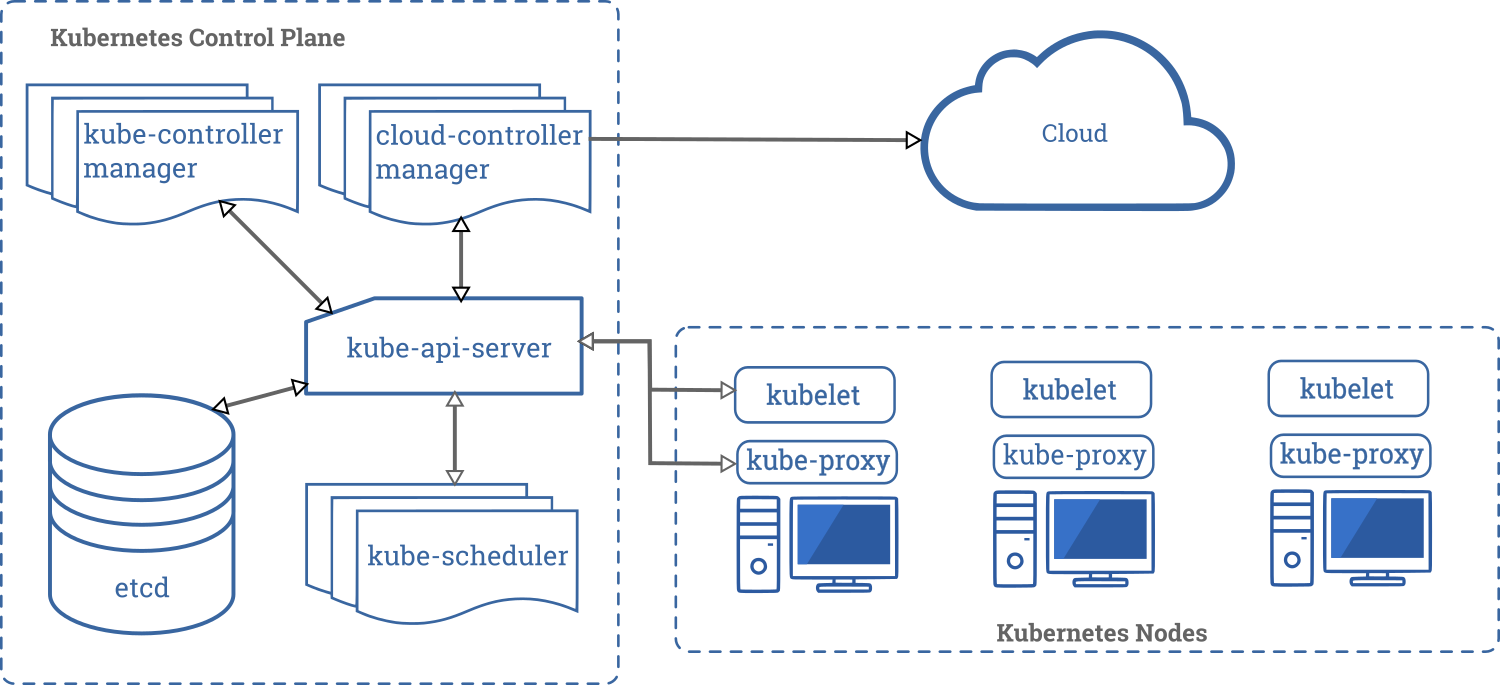

Component Review

Let’s start by reviewing the components of the Kubernetes control plane. API resources are persisted to a highly available data store, typically etcd. Alongside it run three components: kube-apiserver, kube-scheduler, and kube-controller-manager. Finally, cloud providers run their own version of cloud-controller-manager to create external resources such as load balancers.

Main API Resources pre-CRDs

Prior to CRDs exploding in popularity, most of the main API resources created roughly map to revenue-generating activities:

- Pod - consumes resources on a node

- DaemonSet, Deployment, ReplicaSet - create many Pods

- Service - consumes bandwidth and may also create an internal/external L4 load balancer

- Ingress - may create an L7 load balancer

- PersistentVolume, PersistentVolumeClaim - may create cloud storage

There were a couple of resources used to store config data that taxed the API server when read or written, but their usage was generally low:

- Secret

- ConfigMap
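The split between the two lists can be sketched as a simple classifier. The grouping below just restates the lists above; anything it doesn't recognize, such as a custom resource kind, lands in an "unknown" bucket. The sample kinds used with it are made up.

```python
from collections import Counter

# Kinds that tend to drive billable infrastructure, per the first list above.
BILLABLE = {"Pod", "DaemonSet", "Deployment", "ReplicaSet", "Service",
            "Ingress", "PersistentVolume", "PersistentVolumeClaim"}
# Kinds that mostly just store configuration, per the second list.
CONFIG_ONLY = {"Secret", "ConfigMap"}

def classify(kinds):
    """Count object kinds by how directly they map to revenue."""
    counts = Counter()
    for kind in kinds:
        if kind in BILLABLE:
            counts["billable"] += 1
        elif kind in CONFIG_ONLY:
            counts["config-only"] += 1
        else:
            counts["unknown"] += 1  # e.g. a custom resource kind
    return counts
```

Before CRDs, the "unknown" bucket was essentially empty; with CRDs it can become the largest one.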

Cloud providers could generally subsidize the control plane by offering it for free, since increased load on the control plane meant increased revenue-generating activities.

Enter CRDs

How exactly do CRDs change the equation? Well, like Secrets and ConfigMaps, custom resources can tax the API server and backing data store without directly mapping to a revenue-generating activity. By definition:

A custom resource is an extension of the Kubernetes API that is not necessarily available in a default Kubernetes installation. It represents a customization of a particular Kubernetes installation.
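For a sense of what that extension looks like, here is a minimal apiextensions.k8s.io/v1 CRD, written as a Python dict so it can be emitted as JSON. The group `example.com`, kind `Widget`, and the `size` field are hypothetical.

```python
import json

# A minimal apiextensions.k8s.io/v1 CustomResourceDefinition.
# The group "example.com", kind "Widget", and "size" field are hypothetical.
def widget_crd() -> dict:
    group, plural, kind = "example.com", "widgets", "Widget"
    return {
        "apiVersion": "apiextensions.k8s.io/v1",
        "kind": "CustomResourceDefinition",
        # A CRD's name must be <plural>.<group>.
        "metadata": {"name": f"{plural}.{group}"},
        "spec": {
            "group": group,
            "scope": "Namespaced",  # scope of Widget objects; the CRD itself is cluster-scoped
            "names": {"plural": plural, "singular": "widget", "kind": kind},
            "versions": [{
                "name": "v1",
                "served": True,
                "storage": True,  # exactly one version is persisted to etcd
                "schema": {"openAPIV3Schema": {
                    "type": "object",
                    "properties": {"spec": {
                        "type": "object",
                        "properties": {"size": {"type": "integer"}},
                    }},
                }},
            }],
        },
    }
```

`json.dumps(widget_crd(), indent=2)` yields a manifest that `kubectl apply -f` can consume, since kubectl accepts JSON as well as YAML. Once it is applied, every `Widget` object is stored in etcd and served by kube-apiserver just like a built-in resource.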

How many CRDs do some popular services use?

- cert-manager: 6 CRDs (in version 0.13)

- istio: 53 CRDs (in version 1.2)
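To check your own cluster, you can group the output of `kubectl get crd -o json` (a `List` whose `items` are CRDs) by API group. A minimal sketch:

```python
import json
from collections import Counter

# Count installed CRDs per API group from the JSON printed by
# `kubectl get crd -o json`.
def crds_per_group(kubectl_json: str) -> Counter:
    doc = json.loads(kubectl_json)
    return Counter(item["spec"]["group"] for item in doc.get("items", []))
```

For example, save the output with `kubectl get crd -o json > crds.json`, then call `crds_per_group(open("crds.json").read()).most_common()` to see which tools contribute the most definitions.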

CRD Popularity Explodes

If I had to guess when CRDs took off, I’d say it was when the Operator Framework was introduced in May 2018 and quickly gained traction. Operators are essentially CRDs paired with a controller that watches the custom resources and responds to events.
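The pattern itself is simple. Below is a toy, in-memory model of it: a controller consumes watch-style events for a custom resource and reconciles actual state toward the desired state. Everything here is hypothetical; a real operator would use a Kubernetes client library and a real watch stream.

```python
from dataclasses import dataclass, field

@dataclass
class Event:
    type: str  # "ADDED", "MODIFIED", or "DELETED", mirroring watch event types
    name: str
    replicas: int = 0  # desired state carried in the resource's spec

@dataclass
class Controller:
    actual: dict = field(default_factory=dict)  # name -> running replicas

    def reconcile(self, ev: Event) -> None:
        if ev.type == "DELETED":
            self.actual.pop(ev.name, None)  # clean up what we created
        else:
            # Converge on the desired state, whatever the current state is.
            self.actual[ev.name] = ev.replicas

    def run(self, events) -> None:
        for ev in events:  # stands in for a long-lived watch loop
            self.reconcile(ev)
```

In a real cluster, each of those events is delivered by the API server, and each reconcile typically reads or writes objects through it too.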

For better or for worse, the community started to use CRDs. Lots of them. OperatorHub has over 100 pre-built Operators that can be installed with minimal effort, and most of them will create many CRDs and a controller.

Every custom resource takes up space in the backing data store, and every controller running a watch loop taxes the Kubernetes API server. Small clusters can now create big loads on the Kubernetes control plane.
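A back-of-envelope way to reason about this load: count the watches the controllers hold open and the bytes their objects occupy. The one-watch-per-kind rule and all inputs are assumptions for illustration; real costs also depend on churn, object size, and how controllers cache.

```python
from dataclasses import dataclass

@dataclass
class Operator:
    # Hypothetical inputs for a rough estimate.
    watched_kinds: int     # assume one watch per resource kind it follows
    objects: int           # custom resource objects it manages
    avg_object_bytes: int  # average serialized size of one object

def estimate(operators):
    """Return (open watches, bytes stored) across all operators."""
    watches = sum(op.watched_kinds for op in operators)
    stored = sum(op.objects * op.avg_object_bytes for op in operators)
    return watches, stored
```

None of these numbers depend on how many nodes the cluster has, which is why a small cluster can still be expensive to serve.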

More Small Clusters

I’ve noticed a trend where teams spin up new clusters for each application. One application may be built around cert-manager and ingress-nginx. Another application may be built around istio and a different version of cert-manager.

Since CRDs are installed at the cluster level, it is not possible to run different versions of the same CRD in different namespaces (and that’s unlikely to change soon). It is easier for teams to take the cluster-per-application approach than to mandate a specific version of cluster tooling, especially with the rate at which the ecosystem is moving.
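Here is a small sketch of the collision. A CRD name is unique across the whole cluster, so two applications that pin different versions of the same tooling can't both have their definition installed. The app names, CRD names, and versions are hypothetical.

```python
from collections import defaultdict

def crd_conflicts(apps):
    """apps: {app: {crd_name: tooling_version}} -> {crd_name: {versions}}.

    Returns the cluster-scoped CRDs that different apps want at different
    versions, i.e. the ones that would force separate clusters.
    """
    wanted = defaultdict(set)
    for requirements in apps.values():
        for crd, version in requirements.items():
            wanted[crd].add(version)
    return {crd: versions for crd, versions in wanted.items() if len(versions) > 1}
```

Any non-empty result is a case where the simplest fix is often another cluster.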

More small clusters mean more control planes, and more subsidizing if a cloud provider is giving away the control plane.
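Under the new pricing, the cluster-per-application habit gets a monthly price tag. A rough sketch, assuming about 730 hours per month, a single billing account, and the free zonal cluster modeled as simply not billed:

```python
HOURS_PER_MONTH = 730       # assumption: roughly 24 * 365 / 12
CENTS_PER_CLUSTER_HOUR = 10 # $0.10 per cluster per hour

def monthly_fee_dollars(zonal: int, regional: int) -> float:
    """Control-plane fee for a fleet on one billing account."""
    billable = zonal + regional - (1 if zonal > 0 else 0)  # one zonal cluster free
    return billable * CENTS_PER_CLUSTER_HOUR * HOURS_PER_MONTH / 100
```

Ten single-application zonal clusters come to 9 × $73 = $657 a month, before any nodes are counted.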

Some Applications Store App Data in CRDs

If you give a developer a free, highly-available, extensible datastore, they’re going to want to use it. For everything. There are applications out there that are storing their complete state in CRDs. Please do not do this. The Kubernetes API server is not a general-purpose datastore.

Use PostgreSQL. Use SQLite. Use Redis with persistence.

I am currently trying to figure out how to stand up a local Docker development environment for an application that uses CRDs as its primary datastore, and it’s a nightmare. postgres:alpine is 73MB. redis:alpine is 30MB. SQLite can be compiled into your app at 500KB. Running Kubernetes in Docker with kindest/node is 1.23GB!
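For comparison, here is a minimal sketch of keeping the same kind of state in SQLite, which ships with Python's standard library, so a local environment needs no cluster at all. The `widgets` table and its JSON `spec` column are hypothetical stand-ins for a custom resource.

```python
import json
import sqlite3

def open_store(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS widgets ("
        " name TEXT PRIMARY KEY,"
        " spec TEXT NOT NULL)"  # JSON-encoded, like a custom resource's spec
    )
    return conn

def put(conn: sqlite3.Connection, name: str, spec: dict) -> None:
    with conn:  # commits on success, rolls back on error
        # INSERT OR REPLACE overwrites any existing row with the same name.
        conn.execute(
            "INSERT OR REPLACE INTO widgets (name, spec) VALUES (?, ?)",
            (name, json.dumps(spec)),
        )

def get(conn: sqlite3.Connection, name: str):
    row = conn.execute("SELECT spec FROM widgets WHERE name = ?", (name,)).fetchone()
    return json.loads(row[0]) if row else None
```

Pass a file path to `open_store` instead of `":memory:"` to persist between runs.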

The Ship Keeps Sailing

Kubernetes is a remarkable project, influenced by hundreds of corporations and thousands of individual contributors. It has gained a ton of features since 1.0, and is more flexible than ever.

But the ability to store whatever you want in the control plane comes at a cost. And today, Google decided to pass that cost on to the consumer.